The Case

El Puente returns! This week he needs some help finding a deleted vodcast between none other than the amazing Scott Hanselman and Professor Smoke (yay! #TeamProfSmoke). Apparently the Prof got a little carried away and spilled the beans on his world domination top-secret plans. Why do the “bad guys” always monologue?

It seems the video was deleted, but may still be in a backup somewhere. El P has obtained some logs for us to chew through to find that incriminating video.

The Data

As usual, let’s dive into the data

StorageArchiveLogs

| count

17458756 records.

StorageArchiveLogs

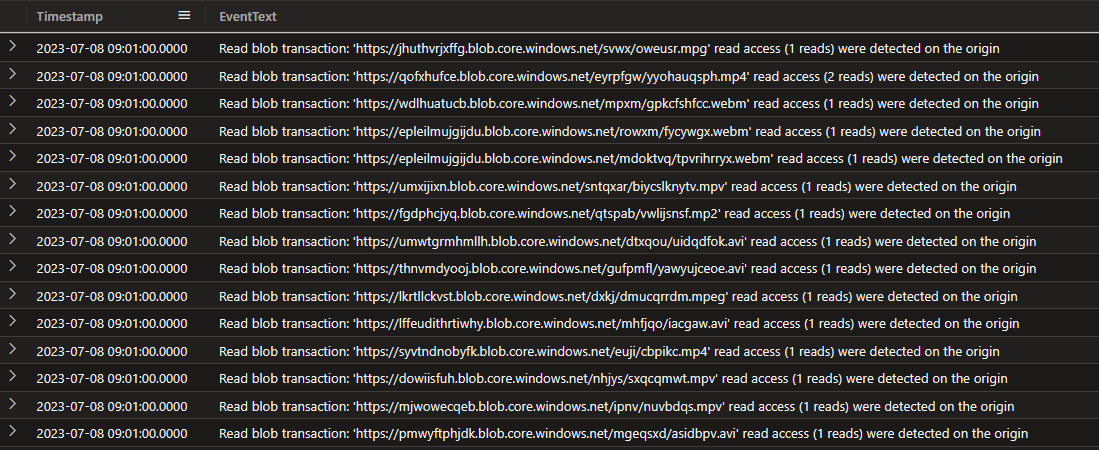

| take 1000

So we have a timestamp and a log entry. But we also have some clues: 1 Scott uploads weekly 2 Most weeks have thousands of views 3 The video was deleted and as far as we know, a replacement was not uploaded for that week.

Sounds like some more anomaly detection is in order.

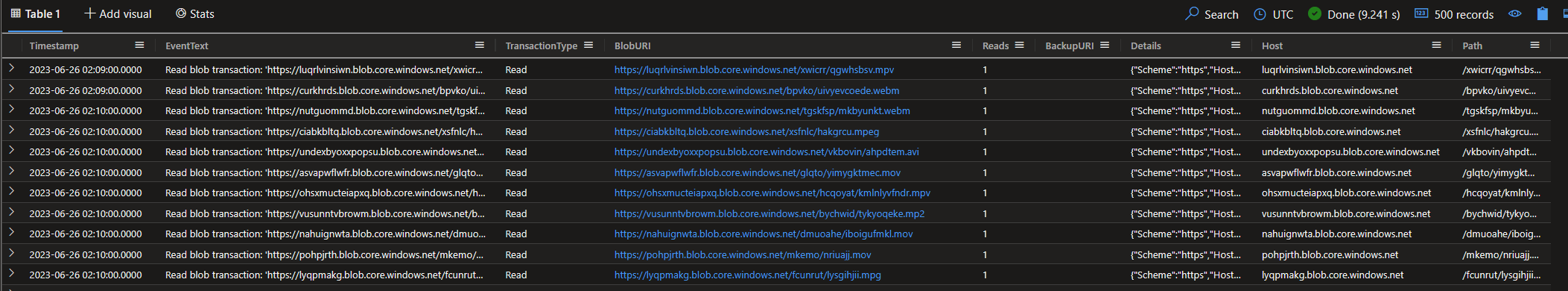

Let’s start by cracking the logs into something more useful. Kusto is super fast, but I prefer to materialise my data, especially if I’m going to be doing a lot of exploratory queries. So let’s create a new table with the data we need.

.set-or-replace StorageArchiveLogsEx <|

StorageArchiveLogs

| parse EventText with TransactionType " blob transaction: '" BlobURI "'" *

| parse EventText with * "(" Reads:long "reads)" *

| parse EventText with * "backup is created on " BackupURI

| extend Details=parse_url(BlobURI)

| extend Host = tostring(Details.Host), Path=tostring(Details.Path)

Here I’ve parsed each log entry into something we can later filter and aggregate on easily. (This is straight from the training btw)

Since we’re going to be doing some timeseries analysis, let’s just quickly create another cache table.

.set-or-replace StorageArchiveLogsTs <|

StorageArchiveLogsEx

| make-series Reads=sumif(Reads, TransactionType == 'Read') on Timestamp step 1d by Host

Now we can dive in.

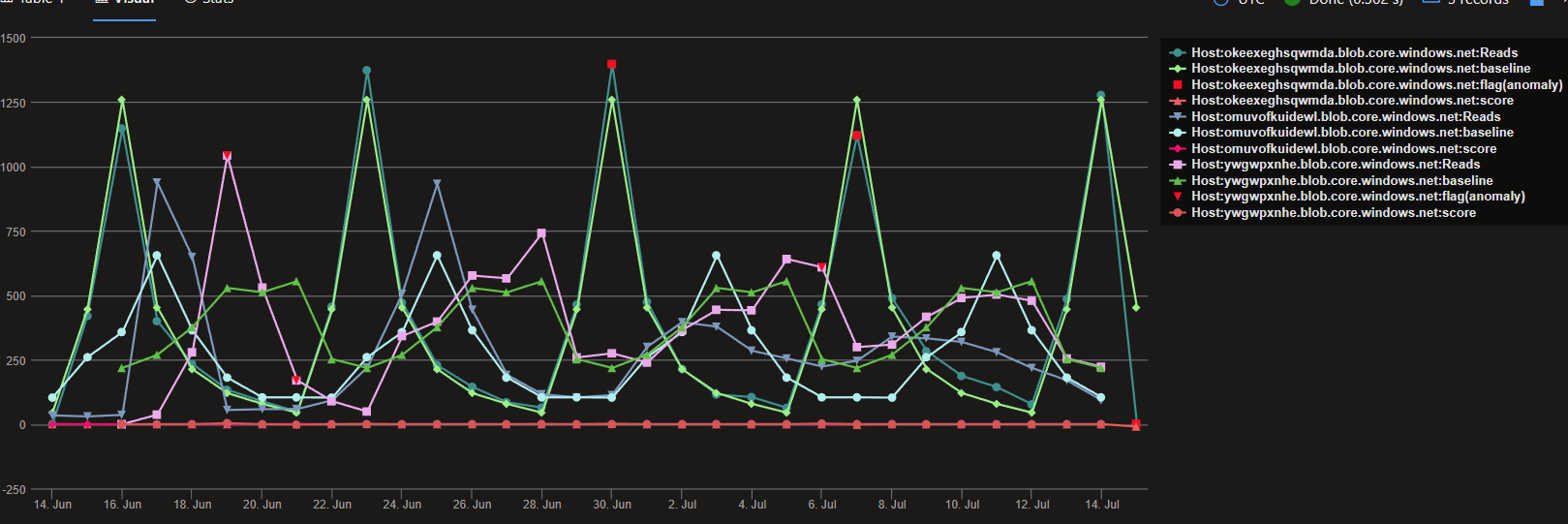

First, let’s find all the videos that have a weekly periodicity to their views. Let’s look for a score > 0.6 as an initial threshold.

StorageArchiveLogsTs

| extend (period,score) = series_periods_validate(Reads, 7.0)

| where array_sort_asc(score)[0] > 0.6

| render timechart with (xcolumn=Timestamp, ycolumns=Reads)

So it seems we have a few candidates, with one in particular that looks like a good match.

Next let’s look for any anomalies for those potential hosts. We’re looking for a negative flag result

StorageArchiveLogsTs

| where Host in (

(StorageArchiveLogsTs

| extend (period,score) = series_periods_validate(Reads, 7.0)

| where array_sort_asc(score)[0] > 0.6

| project Host )

)

| extend(flag, score, baseline) = series_decompose_anomalies(Reads)

| where array_index_of(flag, -1) > 0

| render anomalychart

Let’s put it all together and filter out anomaly scores > -5

StorageArchiveLogsEx

| where TransactionType == "Delete" and Host in (

(StorageArchiveLogsTs

| where Host in (

(StorageArchiveLogsTs

| extend (period,score) = series_periods_validate(Reads, 7.0)

| where array_sort_asc(score)[0] > 0.6

| project Host )

)

| extend(flag, score, baseline) = series_decompose_anomalies(Reads)

| where array_index_of( flag, -1) > 0 and array_sort_asc(score)[0] < -5

)

)

| join kind=inner (StorageArchiveLogsEx | where TransactionType == "Create") on $left.Host == $right.Host and $left.BlobURI == $right.BlobURI

| project BackupURI = BackupURI1

Boom!

Case solved.