On Knowing

Something I love about my job at Microsoft is the way asking for help is not only encouraged, it’s practically mandatory. No-one can truly know everything about waves hands the cloud ( shh, spoilers: it’s really just a bunch of containers on top of four elephants on a giant turtle) and knowing that it’s ok to not only know you don’t know, but to know you know someone that knows all about that thing you don’t know, and knowing it’s totally ok to actually admit you don’t know but you know someone that does who’ll only be too happy to help out, is….a very long run-on sentence. You get the point, asking is good, helping is good.

It was

octarineAzure, the colour of magic. It was alive and glowing and vibrant and it was the undisputed pigment of the imagination, because wherever it appeared it was a sign that mere matter was a servant of the powers of the magical mind. It was enchantment itself. But Rincewind always thought it looked a sort of greenish-purple.

So when a colleague from another team asked for some help with a customer, I knew he knew we both might not know the answer, but I’d know who would.

That’s enough knowing for one blog post.

The Point

So, today’s episode of hashtag the email that became a meeting that became a blog post is about automation, and in particular automating adding users to RLS roles in Power BI.

There are a bunch of ways of doing things manually in Power BI, and even more ways of automating (because nobody in IT signed up for manual labour). Powershell commandlets, calling the Admin REST API directly (from powershell probably), using Tabular Editor to run code scripts against the XMLA endpoint (probably wrapped in a Powershell script) - TMTOWTDI.

There’s also several APIs available, and for manipulating the tabular model, we can use the Tabular Object Model API.

The Scenario

Imagine we have some kind of ITSM tool where a user can request access to a dataset. This request would most likely workflow through a bunch of approvals with the ticket being updated with the correct role, workspace, dataset and other technical details. Or perhaps our HR system can provision roles based on job function, again driven by some kind of CMDB/catalogue/spreadsheet-stored-in-Sharepoint, you get the idea.

The problem then is to expose an endpoint that can be used by these other systems to add users into a role in a particular Analysis Services database aka Power BI dataset.

“Why not just use groups?” I hear you say

Yes, adding AAD groups to roles and managing group membership is totes the preferred way. But sometimes this is not possible for “reasons”.

The Idea

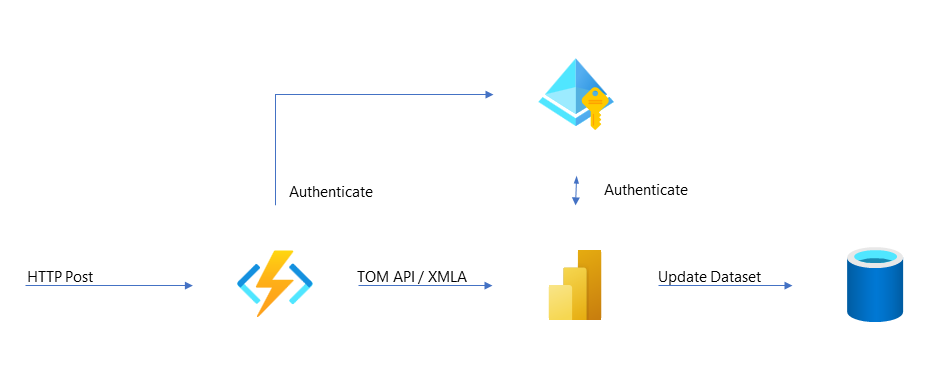

For our PoC we’re going to use an Azure Function and the TOM API to show how easy it is to update the model.

An HTTPS endpoint, secured by AAD, receives a json payload of workspace, dataset, user and role. Using the TOM API, the function authenticates via an app registration/service principal, locates the dataset and adds the user to the requested role.

Create The Function

Pre-reqs

Install the pre-reqs in VS Code: https://learn.microsoft.com/en-us/azure/azure-functions/functions-run-local

Scaffold and Packages and Bears, Oh My!

Scaffold a new function

func new --template "Http Trigger" --name AddUserToRoleFunc

and add the required TOM packages

dotnet add package Microsoft.AnalysisServices.NetCore.retail.amd64

dotnet add package Microsoft.AnalysisServices.AdomdClient.NetCore.retail.amd64

Start Writing the Function

Add imports to the code

using Microsoft.AnalysisServices;

using Microsoft.AnalysisServices.Tabular;

using System.Web;

We’re going to accept a simple JSON request with Workspace, Dataset, UPN and Role. Define a POCO:

public class UserRoleRequest

{

public string Workspace { get; set; }

public string Dataset { get; set; }

public string Role { get; set; }

public string Upn { get; set; }

}

Next update the function method signature to automagically bind the POSTed json to a UserRoleRequest object:

public static class AddUserToRoleFunc

{

[FunctionName("AddUserToRoleFunc")]

public static async Task<IActionResult> Run(

[HttpTrigger(AuthorizationLevel.Function, "post", Route = "addusertorole")]

UserRoleRequest req,

HttpRequest hreq,

ILogger log)

{

The method body will first grab some config from the Environment (in our case a local.settings.json) file, build the connection string using these details along with the workspace name from the request payload.

if( req == null ) return new BadRequestResult();

string tenantId = Environment.GetEnvironmentVariable("TenantId");

string appId = Environment.GetEnvironmentVariable("AppId");

string appSecret = Environment.GetEnvironmentVariable("AppSecret");

string workspaceConnection = HttpUtility.UrlPathEncode($"powerbi://api.powerbi.com/v1.0/myorg/{req.Workspace}");

string connectString = $"DataSource={workspaceConnection};User ID=app:{appId}@{tenantId};Password={appSecret};";

Add The TOM Logic

Now for the main logic which will:

- Connect to the Power BI Workspace (aka the AS Server)

- Find the dataset (aka the AS Database )

- Create a new

Memberobject, specifically anExternalModelRoleMember - Add this to the

Model.Rolescollection - Save the model and disconnect

Note: Error handling is basic to say the least in this example.

try{

Microsoft.AnalysisServices.Tabular.Server server = new Microsoft.AnalysisServices.Tabular.Server();

server.Connect(connectString);

log.LogInformation("Connected");

log.LogInformation($"Adding {req.Upn} to {req.Role} in {req.Dataset}");

var member = new ExternalModelRoleMember();

member.MemberType = RoleMemberType.User;

member.MemberName = req.Upn;

member.IdentityProvider = "AzureAD";

var database = server.Databases.GetByName(req.Dataset);

database.Model.Roles[req.Role].Members.Add(member);

database.Model.SaveChanges();

server.Disconnect();

return new OkResult();

}

catch (Exception ex)

{

log.LogError(ex.ToString());

return new UnprocessableEntityResult();

}

The final function should look like this:

using System;

using System.IO;

using System.Text;

using System.Threading.Tasks;

using Microsoft.AspNetCore.Mvc;

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.WebJobs.Extensions.Http;

using Microsoft.AspNetCore.Http;

using Microsoft.Extensions.Logging;

using System.Net;

using Microsoft.AnalysisServices;

using Microsoft.AnalysisServices.Tabular;

using System.Web;

namespace TabularScripting

{

public class UserRoleRequest

{

public string Workspace { get; set; }

public string Dataset { get; set; }

public string Role { get; set; }

public string Upn { get; set; }

}

public static class AddUserToRoleFunc

{

[FunctionName("AddUserToRoleFunc")]

public static async Task<IActionResult> Run(

[HttpTrigger(AuthorizationLevel.Function, "post", Route = "addusertorole")]

UserRoleRequest req,

HttpRequest hreq,

ILogger log)

{

if( req == null ) return new BadRequestResult();

string tenantId = Environment.GetEnvironmentVariable("TenantId");

string appId = Environment.GetEnvironmentVariable("AppId");

string appSecret = Environment.GetEnvironmentVariable("AppSecret");

string workspaceConnection = HttpUtility.UrlPathEncode($"powerbi://api.powerbi.com/v1.0/myorg/{req.Workspace}");

string connectString = $"DataSource={workspaceConnection};User ID=app:{appId}@{tenantId};Password={appSecret};";

try{

Microsoft.AnalysisServices.Tabular.Server server = new Microsoft.AnalysisServices.Tabular.Server();

server.Connect(connectString);

log.LogInformation("Connected");

log.LogInformation($"Adding {req.Upn} to {req.Role} in {req.Dataset}");

var member = new ExternalModelRoleMember();

member.MemberType = RoleMemberType.User;

member.MemberName = req.Upn;

member.IdentityProvider = "AzureAD";

var database = server.Databases.GetByName(req.Dataset);

database.Model.Roles[req.Role].Members.Add(member);

database.Model.SaveChanges();

server.Disconnect();

return new OkResult();

}

catch (Exception ex)

{

log.LogError(ex.ToString());

return new UnprocessableEntityResult();

}

}

}

}

Payload

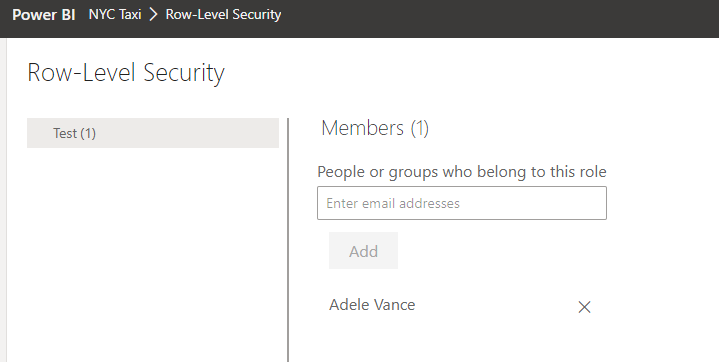

If we have a workspace NYC Taxi for reports based on the ubiquitous NYC Taxi data, NYCTLC, and a user AdeleV to be added to the role Cabbie, we’d post this json.

{

"workspace":"NYC Taxi",

"upn":"adelev@XXXXX.com",

"role": "Cabbie",

"dataset":"NYCTLC"

}

As we’ve added our POCO class to the Function’s Run method signature, the Function will take care of binding this JSON.

Security

Almost there. We’re going to need a App Registration / Service Principal to authenticate the function to the Power BI service.

Head into the Azure portal to create a new service principal and group. Configure the Power BI service with this group and also grant the group Contributor on the workspace.

Add service principal’s TenantId, AppId and AppSecret to the Values section of the local.settings.json file.

{

...

"Values": {

...

"TenantId": "...",

"AppId": "...",

"AppSecret": "..."

}

}

Make sure .gitignore has an entry to ignore your local.settings.json or your secrets will end up on github.

# Azure Functions localsettings file

local.settings.json

Test It Out Locally

func start

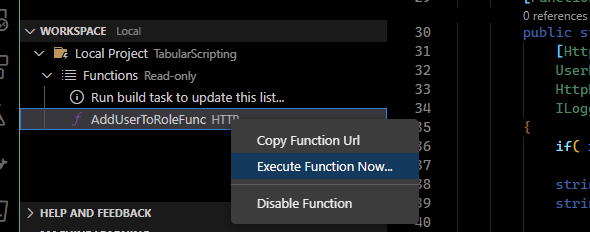

Post some json in VS Code by right clicking on the function and selecting Execute. Paste a payload into the dialog box

Check the service and we can see our user has been added.

(Yes, I didn’t update the name of my Test role. Busted.)

Improvements

The code should now be running locally, but definitely needs a few things before deploying to Azure

- Security. There are a number of ways of securing Functions

- Credentials. We’re using a local.settings.json to store our dev creds, for production, these should be in Key Vault

- Error handling. Ideally, follow the guidelines in Handling errors in the TOM API

But for now, we have the beginnings of a function that can add users to RLS roles.